Comparing Replication Rates in Econ and Psych

Jun 30, 2016 | Categorized In: Science

Contests between fields are always fun for observers. First came the Many Labs study, which found that only 36% of psychology studies could be replicated. Recently, Camerer et al. (2016) reported that for experimental economics, the replication rate was 61%.

However, given that there were only 18 studies in the experimental econ set, a natural question is whether the difference is “statistically significant.” A couple of psychology blogs (here and here) asked this question, and (maybe not surprisingly) came away with the answer of “no”. Euphemistically, one can say that the effects were “verging” on significance. But in this day and age, “verging” has a bit of a pejorative sense. Here is a strongly worded summary:

Source: brainsidea.wordpress.com

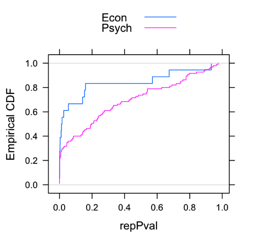

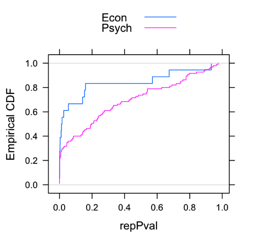
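One way to ask the binary question (replicated or not) is a two-sided Fisher exact test on a 2x2 table of counts. The sketch below is only an illustration, not necessarily the test those blogs ran. The economics counts follow from 61% of 18 studies (11 replicated, 7 not); the psychology sample size is not stated in this post, so the 36-of-100 split is an assumption chosen only to match the 36% rate.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    total = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability of x "successes" in row 1, margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / total

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Sum the probabilities of every table at most as likely as the observed one.
    return sum(p for p in map(prob, range(lo, hi + 1)) if p <= p_obs * (1 + 1e-9))


# Economics: 11 of 18 replicated (61% of 18).
# Psychology: 36 of 100 is an ASSUMED split, not a figure from this post.
p_value = fisher_exact_two_sided(11, 7, 36, 64)
print(f"Fisher exact p-value: {p_value:.3f}")
```

Whatever number comes out, it only answers the yes/no question at the 0.05 cutoff and throws away how large or small each replication p-value actually was.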

One thing I didn’t like about the above analyses was the binary split at p=0.05. To get a clearer idea of the distributions, I plotted the empirical CDF of the p-values. Beyond the fact that there was about twice the mass at p<0.05 for economics versus psychology, there was a clear rightward shift in the p-value distribution, i.e., p-values in psychology appear to be systematically larger.
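For readers who want to reproduce this kind of plot, here is a minimal sketch of an empirical CDF and of the mass below 0.05. The function names and the p-values are made up for illustration; they are not the replication data.

```python
def ecdf(values):
    """Return the sorted values and the cumulative fraction at each sorted position."""
    xs = sorted(values)
    n = len(xs)
    return xs, [(i + 1) / n for i in range(n)]


def fraction_below(values, threshold=0.05):
    """Share of values strictly below the threshold."""
    return sum(v < threshold for v in values) / len(values)


# Hypothetical p-values, for illustration only (NOT the actual study results).
toy_econ = [0.001, 0.004, 0.02, 0.03, 0.20, 0.61]
toy_psych = [0.002, 0.04, 0.15, 0.33, 0.48, 0.72, 0.90]

for name, sample in [("econ", toy_econ), ("psych", toy_psych)]:
    xs, ys = ecdf(sample)
    print(name, "mass below 0.05:", round(fraction_below(sample), 3))
    print(name, "ECDF points:", list(zip(xs, [round(y, 3) for y in ys])))
```

The (x, y) pairs can be handed to any step-plot routine. A curve that sits lower and further to the right means larger p-values overall.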

Now if the question is whether these two sets of p-values differ (and I think there are legitimate reasons to ask this question, which I can get into in the future), one natural test is the non-parametric Kolmogorov-Smirnov test, which asks whether two samples plausibly come from the same distribution. And consistent with eyeballing the data, the difference is significant at the 0.05 level.
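As a rough sketch of what the test computes: the statistic D is the largest vertical gap between the two empirical CDFs, and a p-value can be approximated from the asymptotic Kolmogorov distribution. The code below uses that asymptotic formula, which is an assumption on my part; statistical packages often use an exact calculation for small samples, so their p-values can differ. The samples are invented for illustration.

```python
from bisect import bisect_right
from math import exp, sqrt


def ks_two_sample(x, y):
    """Return (D, approximate two-sided p-value) for two samples."""
    xs, ys = sorted(x), sorted(y)
    n, m = len(xs), len(ys)
    # The largest gap between the two step functions occurs at an observed value.
    d = max(abs(bisect_right(xs, v) / n - bisect_right(ys, v) / m) for v in xs + ys)
    lam = sqrt(n * m / (n + m)) * d
    if lam < 0.2:
        # The alternating series converges poorly here; the true value is ~1.
        return d, 1.0
    # Asymptotic Kolmogorov tail: 2 * sum_{k>=1} (-1)^(k-1) * exp(-2 k^2 lam^2)
    p = 2 * sum((-1) ** (k - 1) * exp(-2 * k * k * lam * lam) for k in range(1, 101))
    return d, min(1.0, max(0.0, p))


# Hypothetical p-values, for illustration only (NOT the actual study results).
toy_econ = [0.001, 0.004, 0.02, 0.03, 0.20, 0.61]
toy_psych = [0.002, 0.04, 0.15, 0.33, 0.48, 0.72, 0.90]

d_stat, p_approx = ks_two_sample(toy_econ, toy_psych)
print(f"D = {d_stat:.4f}, approximate p-value = {p_approx:.4f}")
```

Unlike the binary split, this comparison uses the whole distribution, so a shift in the bulk of the p-values counts even when it does not cross 0.05.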

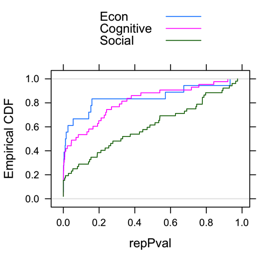

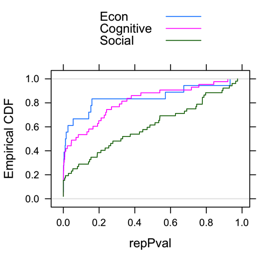

Here are the results from the K-S tests. Econ v. Cognitive: p = 0.39; Econ v. Social: p = 0.0035; Cognitive v. Social: p = 0.0097.

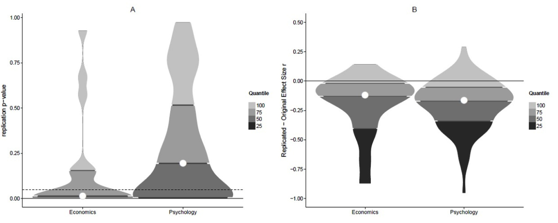

“Our analysis suggests that the results from the two projects provide no evidence for or against the claim that economics has a higher rate of reproducibility than psychology.”

Source: brainsidea.wordpress.com

Still, it’s hard not to conclude that there are some differences between fields when one looks at the distribution of p-values, such as the panel on the right of the figure below.

For economics versus psychology overall, the test output was:

    Two-sample Kolmogorov-Smirnov test
    D = 0.3807, p-value = 0.0249
    alternative hypothesis: two-sided

Are All Psychology Studies Created Equal?

What’s more interesting is looking at subfields. Although the set of economics replication studies was too small, the Many Labs replication was split about evenly between social and cognitive psychology. If one looks at the distributions of these three groups, an interesting pattern emerges. Whereas the distribution of p-values from cognitive psychology looks like a smoother version of the one for economics, the one for social psychology looks much flatter.

So all in all, I agree that it’s not “overwhelming evidence” that economics does better than psychology in terms of reproducibility. The sample size of 18 pretty much guarantees that, but it’s not nothing. Moreover, I think there is a fair amount of evidence of substantial variation across fields (or subfields). If we had a few dozen areas to compare across, we would be in a much better position to say what the substantive factors are. But it seems excessive to say there is no evidence.

Full Disclosure: Colin Camerer was my Ph.D. advisor, but I think this had minimal impact here. The analyses were dead easy, and I only did this because it was curious that people got so worked up about a marginal p-value.